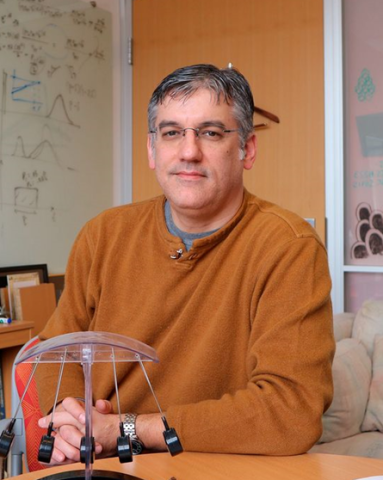

Christopher Monroe


Credit: Brad Horn, National Public Radio

Usage Information and Disclaimer

This transcript may not be quoted, reproduced or redistributed in whole or in part by any means except with the written permission of the American Institute of Physics.

This transcript is based on a tape-recorded interview deposited at the Center for History of Physics of the American Institute of Physics. The AIP's interviews have generally been transcribed from tape, edited by the interviewer for clarity, and then further edited by the interviewee. If this interview is important to you, you should consult earlier versions of the transcript or listen to the original tape. For many interviews, the AIP retains substantial files with further information about the interviewee and the interview itself. Please contact us for information about accessing these materials.

Please bear in mind that: 1) This material is a transcript of the spoken word rather than a literary product; 2) An interview must be read with the awareness that different people's memories about an event will often differ, and that memories can change with time for many reasons including subsequent experiences, interactions with others, and one's feelings about an event. Disclaimer: This transcript was scanned from a typescript, introducing occasional spelling errors. The original typescript is available.

Preferred citation

In footnotes or endnotes please cite AIP interviews like this:

Interview of Christopher Monroe by David Zierler on March 29, 2021,

Niels Bohr Library & Archives, American Institute of Physics,

College Park, MD USA,

www.aip.org/history-programs/niels-bohr-library/oral-histories/46948

For multiple citations, "AIP" is the preferred abbreviation for the location.

Abstract

Interview with Christopher Monroe, Gilhuly Family Distinguished Presidential Professor of Physics and Electrical and Computer Engineering at Duke University. Monroe discusses his ongoing affiliation with the University of Maryland, and his position as chief scientist and co-founder of IonQ. He discusses the competition to achieve true quantum computing, and what it will look like without yet knowing what the applications will be. Monroe discusses his childhood in suburban Detroit and his decision to go to MIT for college, where he focused on systems engineering and electronic circuits. He explains his decision to pursue atomic physics at the University of Colorado to work under the direction of Carl Wieman on collecting cold atoms from a vapor cell, which he describes as a “zig zag” path to Bose condensation. Monroe discusses his postdoctoral research at NIST, where he learned ion trap techniques from Dave Wineland and where he worked with Eric Cornell. He explains how he became interested in quantum computing from this research and why quantum computing’s gestation period is stretching into its third decade. Monroe explains his decision to join the faculty at the University of Michigan, where he focused on pulsed lasers for quantum control of atoms. He describes his interest in transferring to UMD, partly to be closer to federal entities that were supporting quantum research and partly to become involved in the Joint Quantum Institute. Monroe explains the value of quantum computing to encryption and intelligence work, he describes the “architecture” of quantum computing, and he narrates the origins of IonQ and the nature of venture capital. He discusses China’s role in advancing quantum computing, and he describes preparations for IonQ to go public in the summer of 2021. 
At the end of the interview, Monroe discusses the focus of the Duke Quantum Center, and he asserts that no matter how impressive quantum computing can become, computer simulation can never replace observation of the natural world.

Zierler:

This is David Zierler, oral historian for the American Institute of Physics. It is March 29th, 2021. I'm delighted to be here with Professor Christopher R. Monroe. Chris, great to see you. Thank you for joining me.

Monroe:

Thanks, David. Pleasure to be here and glad to chat.

Zierler:

All right. So, to start, would you please tell me your current titles and institutional affiliations? And you'll notice I pluralized everything because I know there's more than one.

Monroe:

Yes, it’s a bit messy right now. I am the Gilhuly Family Distinguished Presidential Professor of Physics and Electrical and Computer Engineering at Duke University. I'm also a College Park Professor at the University of Maryland, although my main position is at Duke, where I have recently moved. Finally, I am the chief scientist and co-founder of IonQ, a startup company that manufactures quantum computers.

Zierler:

All right. So, we have to unpack all of this.

Monroe:

[laugh]

Zierler:

So, you've retained your professorship in Maryland or what exactly is your affiliation at College Park?

Monroe:

I don't have tenure there officially anymore, but I have a good relationship with my Maryland colleagues and continue to be engaged with the community there. I've been at Maryland since 2007. They have a big community in quantum science and, even though I wanted to start something new down here at Duke, Maryland and I agreed that we would continue our collaborations. Usually, they give this type of position to people who are retiring, or being put out to pasture or something. [laugh] I can host students at Maryland, and I can run grants through the university. So, I have a good relationship with everybody up there.

Zierler:

What was the opportunity that compelled you to move to Duke? Was there a new project that was starting that you wanted to be a part of?

Monroe:

Yes. I sensed an opportunity to push the field of quantum computing in a somewhat different direction than that of a conventional university department. For lack of a better term, I'll call it a “vertical.” Instead of developing the physics of all the interesting quantum systems from which you can build quantum technology (from silicon, solid-state quantum dots and superconductors to trapped atoms, ions and individual photons), I want to take one particular platform – trapped individual atomic ions – and build computers out of them by engaging engineers to build better ones that are smaller and higher performing. I want to engage computer scientists to learn how to write software for these things, and then applications specialists who can run new algorithms. So, you can see it involves many different fields, well beyond just physics. At Duke they have a history of going “all in” when they want to innovate or move into a particular research direction. They did this with biomedical engineering 30 years ago, having pretty much invented that field in a way that is now copied everywhere. So, it was very compelling for me to join a couple of my long-time colleagues at Duke, including Jungsang Kim, my fellow cofounder of IonQ, and Ken Brown – a physicist/chemist/computer-scientist who knows everything. The three of us are unified on this mission, and we are supported at a very high level by Duke. This looked like an interesting opportunity, and Duke is very good at supporting things like this, so I thought this would be a good way to make the vision happen.

Zierler:

Who are some of the strategic partners at Duke that are making all of this possible, perhaps in either industry or in the government?

Monroe:

Well, in addition to the tremendous support of the leadership at Duke, one obvious partner is Ned Gilhuly, a Duke graduate, private equity investor, and benefactor to the university. On behalf of his family, Ned underwrote my chaired position at Duke, and made it possible for me to move to North Carolina with my partner Svetlana. Svetlana has a PhD in high energy physics, but is also an expert in neuroscience and almost every branch of the arts; she now works as Asst. VP of Research at Duke, and she is a natural at understanding and organizing almost any research activity on campus. Government partners include IARPA, NSF, and DOD, who have all bankrolled our laboratories throughout the years, and what we are building at Duke will likely be of further interest to those agencies. The industrial partner is easy, that's IonQ. They're located in College Park, just inside the Washington Beltway. Jungsang Kim and I are cofounders there, and both Maryland and Duke have an interesting arrangement with that company regarding intellectual property owned by the universities: when students come up with things during their research that are very interesting to the company, IonQ gets the exclusive license rights to that IP with no royalties, in exchange for a small amount of equity in the company. This is not typical at all – but both Duke and Maryland have this, so it made the transition easy. But there are other reasons, too. I'm part of two academic departments, ECE and Physics, and the chairs of these departments, the deans of their colleges, the research VP, the provost, the president of the university, they are all 100 percent behind pushing this particular brand of quantum science and engineering. And we have an incredible facility: our laboratories are in an old tobacco factory in downtown Durham, the Chesterfield Building. We are part of the university although it doesn't look like one, and we will be branded as something more akin to a national laboratory.

Zierler:

[laugh] What a much better use of American ingenuity.

Monroe:

[laugh] Yeah. Interesting town, Durham. They don't demolish anything: old buildings, railroad crossings for defunct tracks, the old baseball park used in “Bull Durham.” They restore it all, and it's a beautiful city. It has a certain grittiness to it, but now with the Research Triangle, the Biotech Corridor, and anchored by Duke University, it is an amazing place. The students, the grad students and postdocs love it in Chesterfield because it's in the middle of the city action; there’s even a whiskey bar on the ground floor.

Zierler:

Chris, in addition to switching universities, you've also switched home departments. You were in the physics department at Maryland and now you have joint appointments in electrical and computer engineering. Now, obviously, for your field, you traverse all of these disciplines quite easily, but I wonder, substantively, if there are any significant differences in your research agenda as a result of this departmental switch?

Monroe:

Yes, there are. I will say that I did have an appointment in engineering at Maryland and also previously at Michigan, where I worked in the early 2000s. But those were largely courtesy appointments. This one is more substantial. It's 50/50. And I see in Duke engineering something really different than physics. I think it's broader, they are more entrepreneurial, the students come in wanting to learn about systems. That's sort of a black magic word, systems. It's not really a science. But when you build a jet airplane that has a gazillion parts, there's a science to how those parts behave together that is not based on the individual physics of the components. So, I am very interested in bringing that aspect to quantum computing. I just hope they don't make me teach a digital circuits class, because I'm not so good at that. [laugh] Analog circuits, no problem.

Zierler:

[laugh]

Monroe:

I think the attitude of the departments at Duke in particular is very welcoming. They see that quantum science is somewhere between science (physics) and engineering, and both departments and colleges want to capture that.

Zierler:

Chris, a question we've all been dealing with over this past year: As an experimentalist, but somebody who works so closely with computers, how have you been affected by remote work? Have you been impacted negatively or positively by the mandates of social distancing or has it been more or less the same for you?

Monroe:

It's a little bit of a mixed bag. My real struggle with the pandemic and shutdown is the lack of one-on-one face time with students, just seeing them around, bumping into them, socializing with them. With remote work, of course, we have conversations over a video link but it's just not the same. I'm an experimentalist, so we work with our hands a lot, do things, goof around in the lab, break things, play with new toys. On the other hand, one of our new systems actually performed better during the lockdown. We spent several years building this particular quantum computer system. It’s not just an atomic physics experiment, but a deliberately designed system, packaged into a 1 or 2 cubic meter isolated box housed in the laboratory. The idea is to leave that box closed for months. We perform all the manipulations we would use for a normal physics experiment, except that the system was designed so that all of the adjustments we need to make can be done remotely with automated transducers and actuators. It took a long time to design, and it was very expensive. The machine started to come online right at the tail end of 2019. As we were running it, we realized that we didn't need to be in the lab with the machine itself. And then, when the lockdown started in March 2020, the surprise was that this machine started working even better than expected, and it's because there were no vibrations in the building. Nobody was there. The HVAC, the air conditioning system, was rock solid because there was very little variation in the load, doors weren't opening, and nobody was walking around. So, with this system we were gathering great data, with all seven students and postdoc researchers working at home. But the more conventional science experiments were trickier to manage. We had to turn them off for a little while and do some theory at home, and this was common in the field.

Zierler:

Chris, a snapshot question for now that I think is going to infuse a lot of what we talk about subsequently: For better or worse, as you well know, the media tends to portray quantum computing as a bit of a horse race; who's going to get there first, what's it going to mean? From your vantage point, is that necessarily a useful way of thinking about these things? And on an existential level, if it's true that we're still trying to figure out what quantum computing really will be good for, how will we know when we're there?

Monroe:

Very good questions. The field being characterized as a horse race is a little simplistic. I do believe competition in research is very healthy, even when it gets a little chippy. Early on in my career, back in 1990-92, in my field of atomic physics, there was also a horse race to cool a gas of atoms so cold that they condensed into a quantum fluid called a Bose-Einstein condensate (BEC). There was no known application and it didn't seem like there would be some technological spin-off. It still hasn't really happened. But there was new physics there. Each atom got so cold that its de Broglie wavelength was bigger than the spacing between atoms, so they became sort of one. And the laws of physics in this gas or condensate are completely different, like in a superfluid or a superconductor. Now that was a horse race. And, boy, I remember, as a graduate student with Carl Wieman, with Eric Cornell as a postdoc, we were in the middle of it. Carl saw this more than anybody else; I think it drove him. But it just seemed impossible to get our atoms a million times colder than they were to hit BEC. And there were five or six other groups across the world working on this, and it was hyper-competitive. And it was chippy. You'd go to conferences and people would say how far they'd gotten, and everybody would roll their eyes. But I think everybody agrees that it was good for the field. People felt the pressure and, for better or for worse, that made the field move faster. So, let’s fast-forward to now. I think with quantum information technology, it’s different in the sense that there’s a clear push for applications in the commercial space, for real applications, not just science. But we shouldn’t forget that there is science there. A quantum computer exploits the concept of quantum entanglement in a huge system. And that science is really murky. 
Many poorly understood phenomena in solid-state physics are due to entanglement: it is too complicated to simply characterize these systems using that language. So, I don't worry too much about the horse race in the field because it will help to advance the science. Another positive aspect of the horse race is that we have companies big and small pouring money into this field. My corner of this field is doing pretty well in the race. But with a horse race comes some amount of hype. I think many scientists are shying away from quantum computing because there is lots of hype in the field: that quantum computers will replace regular computers and that they'll displace Moore's law, the steady acceleration of conventional computers. I think this will ring true in the long run, but some people worry that the hype may do more harm than good. I don't know what to say about that, except that without a horse race and bold predictions, maybe we never get there. I don’t know that many academics see it this way.

Zierler:

What about the issue, to extend that metaphor of a horse race, in terms of those existential questions about what quantum computing is going to be good for? In other words, in a horse race we know who the horses are, and we know what the finish line looks like. So, in this race, it raises the question: a race to what, exactly?

Monroe:

Another good question! I made the analogy to Bose condensation in my own past. Here there was a race to get to this phase transition whose signature turned out to be as clear as day. The result was obvious, but not obviously useful for anything. It is still beautiful science, and sometimes we do science for its own sake. Quantum computing on the other hand, starts with its potential applications.

Zierler:

We have the mRNA vaccine for that reason so, you know…

Monroe:

There you go. [laugh]

Zierler:

Just for one example. [laugh]

Monroe:

Yes. Quantum computing has that flipped. Let me start over again. When we find an application that uses a quantum computer that we could not do using even very powerful classical computers, it will be obvious. It may not be as obvious as a new phase of matter, though, and this also speaks to the difference between academic quantum computers and commercial quantum computers. In academic computer science, we have proofs. We have certain problems that are proven to be exponentially hard. When you increase the size of the input, the problem gets geometrically harder with that size of the input. That means that when you make the problem big enough, computers just can't tackle it. Many problems are called “hard” because of that. It turns out that quantum computers can make some hard problems easy. There are a couple of examples, such as factoring a big number into its primes. It's known that a quantum computer, if large enough, would be able to do that problem exponentially faster than any known algorithm on a classical computer. The problem is that the number of elements in the quantum computer and the size of the program required are billions of times bigger than what today's quantum computers can offer. So, this application, unfortunately, is not just a few years away; it's probably a decade or more away. I think the more widespread applications in quantum computation will be those that you can't prove are better than any classical computation. That does not mean such an application will not be useful. If I have a device that works and I don't know why it works but it works, I use it – it is useful. In computer science sometimes that's called a heuristic. When quantum computers hit paydirt, it'll probably be for a heuristic problem: say it solves the traveling salesman problem a little better than what we could do using a high-performance computer. Of course, a next generation high-performance classical computer might come around and beat the quantum computer. 
So, while the transition to quantum computers may not be as clear as a phase transition, people will pay for this. If they get a better answer to their pet optimization problem, like how to combine drugs to make a particular compound or to model some financial portfolio function that's really complicated, what do they care? As long as the quantum computer maximizes the portfolio better than what you could do conventionally, it'll get used. People will start paying for it. So maybe that's an economic phase transition. I don't know if this is better than a scientific breakthrough; it's different.

Zierler:

Chris, I want to invert a question I posed to John Preskill at Caltech. As a quantum theorist, I asked him what were some of the advances in materials and technologies that compelled him to broaden out the IQI into IQIM? In other words, why was he able to add matter to the enterprise? And so, for you over the past ten or twenty years, what have been some of the advances in quantum theory that have been most relevant for your research?

Monroe:

Well, one very important one was error correction. In the mid '90s when quantum computing was unveiled, there came this factoring algorithm I mentioned previously, invented by Peter Shor. When you look at Shor's algorithm, it's an amazing thing; it is a combination of number theory and quantum physics, strange bedfellows there. It was clear the complexity of the factoring problem changed from exponential to polynomial when you used quantum information. But while Shor's factoring algorithm was very exciting, it relied on lots of analog computing concepts. The problem is, quantum systems have a continuous variable inside them, the weightings of the superpositions: the quantum version of a single bit can have any weightings of the states 0 and 1 – 80%/20%, 50%/50%, 63.2%/36.8%, and those weightings can undulate like in an analog computer. We don’t use analog computers because they're unstable. Errors accumulate, and that limits how far you can take the computation. At the time, many people thought that the whole field of quantum computing was doomed, because there seemed to be no way to make the system stable. There seemed no way to make an analog system “latch.” On the other hand, if digital or discrete classical systems fluctuate a little bit, they can be corrected through feedback: you measure the state of the system and simply bring it back. If a 5-volt signal droops to 4.8 volts, you boost it back to 5 volts; but if the other logic state is around 0 volts, then there is no ambiguity: this is called “latching.” Well, as everybody knows, when you measure a generic quantum system it changes. It loses its coherence and does not seem to be latch-able. Quantum error correction was discovered in the late '90s, and some people think it was more profound than the idea of quantum computing itself. The idea that you can extend the concept of classical error correction (codified by Claude Shannon in the 1940s) and use the same ideas in quantum information was revolutionary. 
You need more resources, you need more quantum bits or qubits, but you can, in fact, encode quantum systems so that they effectively “latch.” That, I would say, has been the biggest theoretical development. This was probably around the time that John Preskill got really interested, and he is known for his general ideas in error correction. Now, what about materials? Well, to me the “string theory” of quantum information science is the search for the topological qubit. It's beautiful physics, and at a very high level. A topological qubit is a natural system that does its own error correction, in a way. If error correction codes exist, why not posit that there's some type of material that has the encoding already in there? It seems like that would be a pretty far road along the path of evolution to get to that, but you can't rule it out. And maybe it doesn't exist naturally, but you can make it. Maybe you can synthesize a material that naturally has all the interactions to get this error correction to work automatically. There are hints at how you might make such a topological qubit do exactly that. But then you have to build many of them and get them to interact in controllable ways, and often the usual sensitivity to noise comes back into the game. But it's really beautiful physics. In condensed matter and solid-state theory, this is a very popular subject. Some other theoretical advances are on the application side: new algorithms. None have been as weighty or clean as Shor’s factoring algorithm, but there are new ideas on optimization; these are heuristics, once again, often guesswork. Here you guide a quantum state to do something and maybe it corresponds to a function you're trying to optimize. There's been a lot of work in that direction. Further research is what I would call “horizontal quantum research” that captivates most physics departments and scientific quantum centers: look at every new type of qubit that's out there and push them. 
Well, there aren't a whole lot of such technologies. Right now, there are probably 7-8 physical platforms that are really interesting for one reason or another: trapped ions, superconducting circuits, neutral atoms, quantum dots, color centers, single atomic-like defects in solids, silicon spins, and topological qubits. These potential platforms emerge slowly, and there are new ideas. Materials science is a natural place to look for cool quantum effects, even if they don't amount to a new quantum computer. Quantum effects have historically led to interesting discoveries, from sensors to our understanding of phenomena like superconductivity – a wonderful material phenomenon that has a very subtle microscopic underpinning that is not well understood at high temperatures. We still don't have a microscopic theory for that, and this is because the entanglement within is too complex. But this is the domain of quantum information – I believe this field will generate new technology, and also give us a better understanding of fundamental materials science.

Zierler:

Well, Chris, let's take it all the way back to the beginning. Let's start with your parents. Tell me a little bit about them and where they're from.

Monroe:

I was raised just outside of Detroit, Michigan, and my parents were both raised in the city. My father is an actuary. He's also an armchair mathematician and physicist, and I was definitely moved by that. I was always very good with my hands. As a kid I used to take things apart, and I loved working on cars. Once I disassembled a carburetor on one of my parents’ cars: I just took it all apart, and I eventually got it back together, but it was a struggle. My father saw that I was decent at math, and I was good with my hands, and somehow, he knew I was going to be a physicist. I wasn’t pushed into it, but he saw that this was something I should study. My mother is a teacher of art (painting, mostly). They both played piano very well. Between them, I believe they played most of what Frédéric Chopin wrote. So, when I was a kid my life was defined by cars, baseball, and music. [laugh]

Zierler:

[laugh]

Monroe:

And at an early stage, I knew that I was going to have a career in either music or something involving mathematics.

Zierler:

Chris, looking back, do you feel that you experienced Detroit while it was still in its manufacturing heyday?

Monroe:

Yes. Looking back, I think maybe the nadir of Detroit happened after me, but it was a down and out city in the '70s when I was young. My parents were both raised in the city and they spent lots of time downtown in the '50s. They went to University of Detroit, both of them, a very good Jesuit college. And they saw the city go through its transition in the '60s, the racial tensions and riots. I lived just outside of Detroit and there were auto factories everywhere. In almost every other house, somebody in the household worked for Ford or General Motors. One of my father’s biggest clients was the United Auto Workers. One thing that rubbed off on me is my love of gritty cities.

Zierler:

Chris, when did you get interested in science?

Monroe:

Like most youngsters, I started focusing on mathematics more than science. You're exposed to it every year, each year building on the previous. And I became very good in math but, also, again, I was good with my hands, so it made sense to push into science. So, I took as much chemistry as I could, but only a bit of physics because there wasn’t much offered. It was really when I went to college that things started to gel. Somehow, I got into MIT (in high school, out of 250 kids, I was ranked in the 80s). I think it was because of my coding work for my father’s company, and because I coached my little sister’s softball teams, worked on cars, and aced math. I loved it at MIT; I had such a great time there. It was intense. It was really hard. I didn't get particularly great grades, but I just loved the challenge of being in the big leagues.

Zierler:

Did you go in expecting to study physics? Was that the plan from the beginning?

Monroe:

No. In fact, I went in wanting to experience everything. So, I went around and around in different departments. I plotted paths in Chemical Engineering and Mathematics. For a full year, I studied aeronautical engineering. MIT Aero-Astro had an intro course called Unified Engineering; this was the main course you took for the whole year. It met two hours every day, with killer weekly problem sets, an exam every Friday, and finally a “systems problem” every quarter. It was a really hard class. I loved it. I took Unified Engineering for my full sophomore year and was even asked to be a TA for the next year. But something happened toward the end of that year. We were working on one of the systems problems (I forget the details); it was some type of aircraft design with a very complex wing, a very complex aerodynamics problem. We were designing it so that it would support a certain amount of weight. And then, at the end of the problem, after all the math and modeling, they said that the wing can only support half the weight you designed for because we had to apply a “safety factor” of two. And that really got me upset. I thought: don't we trust the physics? Why do we need the safety factor? I understand that you do need a margin of error, and indeed I would rather fly in an airplane where they had a pretty conservative safety factor. But it seemed so inelegant! It occurred to me that if I wanted to apply safety factors, I would do this later on in my career, but not in school. I learned right there that such a systems engineering concept was very difficult to teach and learn, so I wasn’t sure I wanted to continue in engineering, at least in college. As it happens, that year I also took a course in quantum physics as a “science distribution” class for my presumed engineering degree. It was real quantum physics though, the first level they offered in the physics department. And this course was the pivotal point when I decided that I was going to study physics. 
I still thought I may well become an engineer later on. But now, entering my third year, I had two years to cram all those advanced physics courses. In my first term as a physics major, I took Jackson. That’s very high-level electromagnetism, as every physics graduate knows. I got a C. And now I do electromagnetism for a living…[laugh]

Zierler:

[laugh]

Monroe:

I sometimes tell my students about that C grade, when they are down on their own studies. When I started taking physics it felt much more rigorous than engineering, and it was very pure and beautiful. And I knew that in the future I may well be doing engineering, very applied, but while I'm in college, I wanted to learn the “real stuff” and then I could always add safety factors later. [laugh]

Zierler:

Did you always know that it was going to be an experimental work, or did you dabble in theory very much as an undergraduate?

Monroe:

I remember when I took a seminar course in mathematics in my freshman year: we met only one hour a week, and we did problems that were on the Putnam exam. The Putnam exam is this incredibly hard math exam, like the famous Moscow Mathematical Olympiad, with lots of vexing problems from number theory. There are six problems on the Putnam, and you did really well if you got just one of them right. So, we did these problems all term, and over the entire term I think I only solved one problem, and not very elegantly. That course really slammed me because I saw my peers being so much better than me at it. It didn’t take me down, but I knew that I could not be a theorist and certainly not a pure mathematician; I just didn't have it. But there were so many things out there that I didn't know. Plus, I was always good with my hands and I needed to work with my hands. So, it was natural for me to want to be an experimentalist. In my junior year when I started in physics, I also got involved with a laboratory that did optics and laser science. Why? It was almost random. I knew that it was an interesting field. There was some theory involved, but the main attraction was that you would build things. I worked in the basement of the MIT Spectroscopy Laboratory. This was one of those arbitrary decisions in life when you do something almost at random and then it guides the rest of your life. In my case, it really did.

Zierler:

What was so compelling to you about this work?

Monroe:

I don't know. I think I just loved the sense of owning something: you build it and it's yours, even if it is a noisy electronic circuit. I needed to be in control of what I did. Even though I was an undergraduate, low on the totem pole in that lab, there were certain tiny aspects of the experiment that I owned: I did this, I built this power supply, that was mine. Now I realize that it is trivial: you can buy those things. But then I just loved the idea of owning it.

Zierler:

Did you ever think about going into industry out of undergraduate or was graduate school always the goal for you?

Monroe:

Graduate school was not really the goal, especially at a place like MIT where most of my friends were engineers. They were going on to careers right after college. They had a good program (many colleges do this now) where in five years you get both your bachelor's and master's in engineering, and then you graduate and work for Analog Devices or Intel or some other company. We didn't have that in the sciences, but I still interviewed for engineering jobs, maybe because of peer pressure. But in my fourth year, it was also clear that in physics most people did go to graduate school. Sometimes I think that my choice to go to grad school came out of my immaturity, in the sense that it was easy to continue doing the same thing I had been doing: going to school. I was not ready to go out into the world, but at the same time, there was obviously a lot to learn. One thing I appreciate now about a PhD is that by specializing in something, whatever it is, you learn a whole lot about everything. Students often don't appreciate that. They're afraid of becoming this very sharp needle of knowledge, and the first few years of graduate school can feel like that. But when successful graduate students look around, they can understand how others do their work, based on what they do for their own research. So, I guess I backed into graduate school because it seemed like what you were supposed to do in physics. I'm really glad I did. I learned a hell of a lot.

Zierler:

What kind of advice did you get about people to work with, programs to focus on for graduate school?

Monroe:

One reason I chose optics and the atomic physics field was that I knew that I could hop into an industrial job right away, because optics and lasers are used in a great deal of advanced technologies. There was an engineering aspect of this. Much of the advice I received came from my undergraduate lab. The lead graduate student I worked with, Dan Heinzen, as well as Mike Kash and George Welch, were all veterans in the research world. My academic advisor Mike Feld was a great person who really took care of his students. They all suggested to me where the interesting programs were, so I applied to most of them. One was an up-and-coming place, the University of Colorado at Boulder, where I eventually went. I didn't know much about it; the furthest west I had been was Chicago. But in Colorado there were a few folks doing neat things with lasers and atomic physics: Jan Hall, Dana Anderson, Carl Wieman.

Zierler:

This was a powerhouse group, and you understood that right away?

Monroe:

Yes. They were better than Harvard, Yale, Stanford, or Caltech, in fact, but I’m sure people would dispute me on that. It’s fun to be in a place like that where you are always the underdog on paper. From the East Coast, CU perhaps wasn’t so well known, so this did seem like a risk. Carl Wieman had arrived there a few years earlier from Michigan, where he was unbelievably denied tenure. [laugh]

Zierler:

[laugh] Not a proud moment for Ann Arbor.

Monroe:

Right. Everybody knew better. Carl had some really interesting ideas. And Jan Hall, legendary already, the consummate laser builder, was there. I got to know Jan later on. He had two boys, and when they each turned 16, he gave them a junker car and their job was to fix it up and make it drivable. That tells you a lot about the guy. [laugh]

Zierler:

Was the presence of JILA and/or NIST sort of attractive to you right out of the box?

Monroe:

Definitely. I didn't really know about the details until I visited. They had a machine shop with eight full-time people. They had a full-time welder who could weld aluminum, and they had all these machinists. And I love machining. I was in the machine shop all the time working with those guys. And I thought that's how all graduate schools in physics would be. Boy, was I shaken when every other place I went to didn't have anything like that. At JILA we had our own glassblower (and there was even another one in Chemistry). And with the NIST component, a full US national laboratory right there on the campus, CU atomic and laser physics was a big deal. Of course, that's why Carl Wieman, and Dana Anderson, and Jan Hall were there. But I didn’t know the depth of the place when I applied.

Zierler:

Chris, how did Carl become your mentor? What were the circumstances leading up to that relationship?

Monroe:

A good thing about a school like Colorado is that they don't see a whole lot of applicants from a place like MIT or Harvard, and Carl had been an MIT undergraduate, so I think my application caught his eye, and he gave me a tour in the spring before graduate school. I saw his group, and it was a small group that was doing interesting things. The cold atom stuff that got him a Nobel Prize was his hobby.

Zierler:

[laugh]

Monroe:

He was doing precision metrology in atomic physics; very difficult experiments measuring tiny asymmetries in atoms. He was so driven, and he needed to have an almost impossible goal. As I said earlier, he needed the horse race; maybe that's what made him click. That drive was infectious to me.

Zierler:

How did you join your own interests with what the group was doing?

Monroe:

It took pretty much two full years for me to find my own way there. That was tricky. In fact, after those two years, I left for the summer. I was going to quit grad school and interview for engineering jobs. I think I have hinted at the reason why: I wanted control of something. I was an immature young graduate student. There was an older grad student, Dave Sesko, and a postdoc, Thad Walker, on this project; they were great people. But I needed to control things, even though I was way too young for that. So, I told Carl in the spring of my second year that I was leaving to go into industry. And I wanted to travel to Europe with a couple of college friends for the summer. And Carl said, "Ok. Well, when you get back from Europe look me up and we will get you back in the lab." I'm not sure why he said that, but it really struck me. And then, sure enough, in the middle of my Europe trip I'm thinking, God, what am I doing?

Zierler:

[laugh]

Monroe:

I still can't believe Carl would treat me the way he did because in his place I would probably have just let the student go. But when I got back, Dave Sesko had graduated, and Thad was on his way to a professorship at Wisconsin. I should say that Thad was a wonderful person to me, professionally. He had a big hand in “setting me straight,” but in very kind and subtle ways. So, upon returning from Europe, Carl wanted to start a new project and he gave it to me - alone. He gave me the whole lab and I worked with him one on one. Eric Cornell came a year later, but I started that lab on my own, and it was amazing! I loved it!

Zierler:

And what was the project?

Monroe:

It was a method of collecting cold atoms from a vapor cell. The conventional way was to make an atomic “beam” by sublimating atoms from a source and directing them into the chamber like a firehose. They were moving way too fast to capture, though, so you had to slow them down first with a counterpropagating laser “slower.” That is an extra step, very complicated: big vacuum systems, chirped lasers, lots more stuff. Loading directly from a vapor is much easier than from a beam. You just make a glass cell, and the atoms are everywhere, but their average speed in any direction is smaller. You get more of them. It was a dramatic simplification in cold atom physics. That became my thesis, and we wrote a paper almost right away that is now one of my most highly cited papers, even after all the quantum computing ones, and it's now essentially the starting point for all cold atom experiments. I also developed some of the theory on how the atoms get captured, the fraction that gets captured and all that, and how it depends on control parameters like laser power and tuning. So, that third year of grad school was the perfect year for me. I really fell into things, and I gained an appreciation of theory and of using computational support behind the experiments. And I should also add that in that third year I wasn’t exactly alone. In the second half of the year I was working with a JILA visiting fellow, a guy named Hugh Robinson. He was from Duke and he was visiting Leo Hollberg and others at NIST, and he spent time with Carl. So, he and I made the first atomic clock using cold cesium atoms. Hugh was a retired professor, and he pretty much taught me all I know about microwaves and RF. Hugh always said that electronic chips were filled with smoke, and the key was to keep the smoke inside the chip! I always remember that one. And then, Eric Cornell came in the fourth year, and we kicked it into high gear and really started moving.

Zierler:

What did Eric bring to the project?

Monroe:

Eric and Carl, they're complementary in a certain way. They're both amazing physicists but in different ways. Eric leaned on me for most of the experimental stuff. He had never run a laser before, and here he was in a laser lab. But he had high-octane ideas. Like the quest for Bose condensation: what are we going to do with it and how do we get there? He is brilliant in that way, and at the time he had a fundamental statistical mechanics view of this phase transition. Once Eric gave an informal group meeting on how to phenomenologically introduce a nonlinear term into the Schrödinger equation to theoretically account for a weakly interacting Bose gas. This was all off the cuff in 1991, and over the next decade this approach, essentially the Gross-Pitaevskii equation, became the norm for studying Bose condensates. Not many know that Eric was the first to even pose this connection, as he didn’t publish it. In contrast, Carl was the consummate experimentalist. He maybe didn't have this innate sense of theoretical physics that Eric did, but when Carl looked at a signal, he would tell you what's wrong and what you must work on. He had this uncanny ability to find the long pole in the tent; this came from his penchant for precision metrology, I suppose. I always thought it was the ideal experience for a graduate student to be working with the two of them. These two guys were amazing! For about two years the three of us were a very closely knit team, all on the same page. There were no ego issues, not a single one. And, yes, officially I was low on the totem pole, but it was still great. Things were happening and I felt ownership. I still had that immaturity, but they knew it and they wanted me to own the apparatus. It was wonderful.

Zierler:

Chris, intellectually, how did you carve out your own contributions in terms of presenting a thesis as your own?

Monroe:

I chose things to work on, and I was given remarkable autonomy to do so. Carl, Eric, and I each had distinct roles on the project, but there was also a symbiosis, in that we were all pushing on each other. This was an ideal team. We needed to get the atoms colder, much colder, and denser. After dramatically simplifying how to produce cold atoms in the vapor cell through laser cooling and trapping, we had to turn off the light, because the light kept the atoms too hot. So, we turned to magnetic traps: no light there. I spent weeks and months dropping atoms into a magnetic trapping zone, focusing them with permanent magnets I bought at K-Mart, then more sophisticated “current bars,” wires wound on a complex form. One day Carl came up with the inspiration to toss the atoms upward instead of dropping them down; they would naturally get slowed by gravity as they approached their apex. We originally tossed the atoms up by accelerating a linear translation stage with retro-reflecting mirrors on it to create the requisite upward and downward Doppler shift on the trapping beams. Eric’s first few weeks as a postdoc were spent calibrating solenoids to measure their impulse; we needed to get the stage up to 2 m/s in a few milliseconds. But the “bang” of the solenoid striking the translation stage caused the lasers to jump out of lock, so we had to send a pre-trigger signal to unlock the lasers. The stages were rough, though, and the dragging on the ball bearings heated the atoms as they were tossed. Once when Jan Hall visited, he asked why we didn’t use acousto-optic modulators instead of this Rube Goldberg-like mechanical launcher. It was a very good question, so we took his advice. Getting to Bose condensation required another factor of 100,000 lower temperature. We turned to a bunch of exotic magnetic trap geometries, competing vigorously with the other groups doing magnetic trapping, like Dave Pritchard and Wolfgang Ketterle at MIT and Bill Phillips at NIST in Washington. 
They were pioneers in magnetic traps, but they didn’t have atoms as cold as ours. It’s here that we really paved the road to Bose condensation, by loading atoms into a magnetic trap with great efficiency and maintaining high density. We tried so many magnetic field coil designs and geometries, even a “baseball coil” whose wires were like the seams of a baseball on a sphere. Eric started plotting how we would evaporatively cool the atoms for the final step. The capstone of my thesis was actually a negative result: that cesium wasn’t going to cut it. We measured that the elastic collision rate between ultracold cesium atoms (required for rethermalization and evaporation) was not high enough for easy evaporation. However, we thought rubidium would be more promising, because there was a simple scheme to laser cool to extremely low temperatures based on some results from Steve Chu’s lab at Stanford. (In the end, rubidium worked because of its more favorable collisional properties for evaporation, so Carl and Eric had that piece of luck working for them too!) It was an adventure, this zig-zag path toward Bose condensation, and it was among the most intense and fun times I have had, professionally.

Zierler:

Besides Carl, who else was on your thesis committee?

Monroe:

Jan Hall and Dana Anderson. I later became very friendly with Jan Hall, but during graduate school it was tricky. I spent some time in his labs with my graduate student friends, learning techniques. I remember visiting Jan in his office, and between stacks of books and papers, he showed me a circuit: not a diagram but the real thing, op-amps, caps and resistors on a printed circuit board. There was a 3D character to the circuit: the feedback resistor was positioned a certain way above the chip because it gave a particular capacitance that would shape the op-amp gain response in a certain way. Jan was a real artist when it came to circuits. He knew all the chip numbers: the OP-27 had great voltage offset characteristics, but watch out for bias currents that should be compensated this way or that. After I wrote my thesis, I think Jan was reluctant to sign it. He thought that the prose was not up to his standard: I put in a few silly lines about circuits blowing up. Fortunately, Carl helped me get him to sign it. Jan was also on my graduate qualifying oral exam committee, early on in my graduate career. He asked me to write down Maxwell's equations on the board. I did that and proceeded to derive the electromagnetic wave equations near a conductor and so forth, and we got to the point where you would expect a thicker conductor to shield radiation better, but somehow I was getting the opposite relation. [laugh] I knew it was wrong, but the math wasn’t showing it. And I heard myself saying incorrect things because that’s where the math was taking me. I think he laughed at that… So, this was the beginning of a healthy relationship with a professor, where there is both fear and respect. [laugh] I still interact with Dana Anderson; he is a pioneer in bringing engineering to atomic physics. He founded a company called ColdQuanta, and he has been an inspiration in my own attempts to engineer a quantum computer based on atomic physics techniques; more on that later.

Zierler:

How much interaction did you have with Dave Wineland as a graduate student? In other words, when the postdoc opportunity became available, how smooth a transition was that for you?

Monroe:

I didn't know him very well. I certainly knew of his group. They were only a quarter mile away, and I used to go down there maybe every couple of months for a seminar. In fact, I remember hearing Eric Cornell give a seminar down there while I was in grad school. I think Eric originally planned to work with Dave Wineland for his postdoc, and I believe funding issues interfered. Funding in AMO Physics in 1990 was not nearly as good as it is now, but luckily Carl ended up picking up Eric. So, I didn't know Dave very well, certainly not personally. I just knew him by reputation.

Zierler:

What project did you join when you got to NIST?

Monroe:

This was a very hard transition for me because NIST is a government laboratory with more staff than students. So, Dave’s group had no students, but a lot of postdocs, a lot of good ones, through the National Research Council (NRC) Postdoctoral Fellowship. I had one of those fellowships myself because my graduate work was pretty visible. But there were eight other postdocs there, and I was the new one. That was tough, because there were several projects, and they all already had people working on them. So, my first year was a little odd. I did something a bit unconventional, and maybe this showed my immaturity in not wanting to change fields too much. I wanted to combine trapped ions with trapped neutral atoms. Dave Wineland was the ion trap god, and his group really refined the art of ion traps for metrology. And I had just come out of one of the most visible cold neutral atom groups, so this seemed natural. I should say that just as I left Carl Wieman’s group, Eric Cornell took a permanent position at JILA and he asked me to be his postdoc. He was going to push on to achieve Bose condensation. The reason I declined was that I thought they were 10-15 years away from BEC, so I wouldn’t see it during my potential postdoc tenure. This was 1992, and of course they observed BEC in 1995.

Zierler:

[laugh]

Monroe:

So, it was just three years. [laugh] And I'm convinced that, had I stayed on as his postdoc, it would've probably been two years, a year or two ahead of everybody else, including the big dogs at MIT.

Zierler:

Was your sense that Eric thought this was a 15-year timeline as well, or was he thinking this was going to happen much quicker?

Monroe:

I don't know. Probably not three years. But I think the horse race was really what made it just a couple of years instead of 15, so the battle between CU and MIT was productive. Eric ended up hiring Mike Anderson as the postdoc, and Mike did very well, along with a phenomenal student of Eric’s, Mike Matthews. Anderson later founded a company called Vescent Photonics, and his company has excelled. It’s fun to think about how things may have been different had I stayed with Eric. However, it was very good in the end. I really wanted to move into a different field; that is always a healthy thing.

Zierler:

Chris, some intellectual foreshadowing: where we're headed, of course, is the quantum work. Where is the seed, really? Where was this planted for you? Was it more in graduate school or your postdoc?

Monroe:

We started out talking about how I was interested in building quantum computers to run algorithms, do computer science, and so forth, but the ground floor of my work in quantum is atomic physics: controlling individual atoms with light. That's what I did in graduate school. Even though it was a different kind of atomic system, I became familiar with all of the machinery of atomic physics, experimentally and theoretically. And that obviously made it very easy to transition to quantum information and quantum computing using individual atoms. And, as it turned out, this was the luckiest thing in my life, that the field just dropped in our laps while I was a postdoc at NIST.

Zierler:

So, after that first year, what clicked for you? How did it all come together?

Monroe:

I had my idea of how to succeed in this lab, as I said earlier. I was going to bring in my neutral atom experience and combine neutral atoms and ions. I spent a full year on that, along with a visitor from Brazil, Vanderlei Bagnato, who happened to be interested in the same thing. I got to know Vanderlei very well, and we succeeded: after a year, we juxtaposed cold sodium atoms and sodium ions in a single apparatus. And then we dropped this experiment entirely because it wasn't going anywhere. We should've published something, because the idea of combining cold atoms and ions turned into a small cottage industry in the 2000s. However, I learned an incredible amount of technique in that year. We sensed the presence and motion of the ions by measuring their image current in a surrounding circuit; this was incredibly difficult, and I earned my radiofrequency credentials at this time from Dave Wineland and fellow postdoc Steve Jefferts. They both came from the Hans Dehmelt school (Dave and Steve’s father were both postdocs with Dehmelt), which sees everything in the world as a tuned LRC oscillator. So now I also consider myself as coming from the Dehmelt school of RF. But after one year of my postdoc, I really had nothing to show on paper, so I went to Dave. He was a great mentor, and he immediately put me on a project that would trap ions very tightly. And when you confine anything really tightly, its quantum levels of motion are spaced very far apart. That means you can cool them to the ground state of motion, to nearly absolute rest. And the foreshadowing here is that when you do that, that's the first ingredient you need to make a quantum logic gate. And nobody had really done that before.

Zierler:

Chris, can you explain the science behind that? Why do you need that?

Monroe:

With trapped ions as quantum bits, you need them to interact to make a gate, such as an AND gate between their two-level states. Trapped ions talk to each other through their motion, like two pendulums connected by a spring. And if you have a lot of thermal energy in these pendulums, the interaction is noisy; it washes out and becomes useless. But if you prepare the motion of the ions in the ground state, you remove all of the entropy so that it's in a pure quantum state. Then you can do pure quantum operations. So, in order to do a quantum gate between multiple atoms you need the atoms to be cold. In 1993, I'd never heard the term "quantum computer"; none of us had. We were making cold atoms because they were useful for atomic clocks. And we had further ideas on how to entangle atoms to make the atomic clocks even better. It turns out if you take even two atoms and put them in an entangled state, and then run them together as an atomic clock, the clock runs twice as fast. It has the same noise, but it runs twice as fast. That means the signal-to-noise ratio is the square root of 2 better than with two independent atoms. So, you entangle atoms just to get a square root of 2 improvement in your clock. If you have 100 atoms, you gain by a factor of 10. This was pure physics, but it had an application to atomic clocks and metrology. In fact, a similar effect is now used to enhance the sensitivity of the LIGO gravitational wave detector. So, we were busy trying to make entangled states in '93 and '94, by making a very pure type of “squeezed” state, in the parlance of quantum optics. Steve Jefferts and I made this very tight trap that would allow the future control of trapped ion qubits. Steve had forgotten more about RF than I ever knew, and I was the laser guy, so we were a very productive team, and we got along wonderfully. The lasers, by the way, were much more challenging than what I had used in graduate school. We needed ultraviolet light, and this was produced by frequency-doubling dye lasers. 
Fortunately, we had Jim Bergquist, Wineland’s right-hand man. Jim was one of Jan Hall's early students in the '70s. Amazing laser designer, super-nice guy, great athlete. Jim was a wonderful mentor on lasers and integrating them with the ion traps; I owe so much of my knowledge in laser science to him. So, in my second year of the postdoc, things started looking up. It was just fun, and we started publishing. The year 1994 was the formative year of my career. This is when so many things happened at the same time, between July and December. The International Conference on Atomic Physics (ICAP) happened to be in Boulder that year (Dave and Carl were the local coordinators, actually). A fellow named Artur Ekert gave a theoretical talk on quantum computation. At first, I thought this was just some fancy way to simulate quantum dynamics on your laptop, simply performing computations that applied to quantum physics. But no, this was much deeper: it was about using quantum systems themselves to compute things in a completely different fashion. Ekert had just learned of Shor's algorithm, which was discovered that summer, and he told our community about it. Within weeks, two theorists, Peter Zoller and Ignacio Cirac, showed that you can actually build a quantum computer using trapped ions, and they showed exactly how to do it. It involved cooling ions to the ground state and performing operations. At the same time, Dave and I had figured out how to entangle trapped ions along similar lines to what I discussed earlier. So, we had the experiment, and even accumulated data on that first quantum gate before the end of '94. It was so fast; I'd never seen anything like that. Right there at that moment, in December 1994, Dave hired me. He said to me over lunch one day, “I want you to stay on our staff. We're going to play around with this quantum computing stuff. We’re going to build the components of a quantum computer.” It was clear that that endeavor was going to have legs. 
That was an amazing year in my career, 1994.

Zierler:

Chris, just to freeze this moment in time: this is essentially the scientific and intellectual blueprint for quantum computing, and here we are 27 years later. We don't have to rehash what we said at the beginning of our conversation, but just a very broad question at this 27-year point: this is a pretty long gestation period that we're still in the middle of right now. What explains that overall?

Monroe:

That's a deep question. Silicon transistor technology was not unlike this. The first germanium solid-state transistor was made in 1947, and the first silicon VLSI chip in the late '60s. That's only 20 years, and in our case, we're at 27. [laugh]

Zierler:

[laugh]

Monroe:

The comparison is not exactly fair, because silicon transistors allowed the fabrication of a computer whose theoretical underpinnings had been previously known for decades, if not centuries. Quantum computing, on the other hand, is an entirely different mode of computing that was only discovered in the 1980s or 1990s. We still don't know exactly what the quantum killer applications will be. Industrial approaches to quantum computing only began recently, 10 years ago. I think this gestation period is about right: 20 years of research, poking at things, figuring out what works, what doesn't work. This evolution is just slow. I have read that it typically takes 20-30 years for new technologies to take hold, and quantum computing is not just a new technology; it is revolutionary. But I do feel the pressure. If it's already been 27 years, we had better have something pretty soon. [laugh]

Zierler:

Chris, a more administrative question: As you were really getting comfortable at NIST and you became a staff member, what was the basic divide, both in terms of research, culture, and project expectations in terms of basic science versus applied science?

Monroe:

We were in a very special place, and this was partly due to the way Dave Wineland ran his group. It was a very academic group. Dave hired the highest-level postdocs that you could get in atomic physics. The graduate student at MIT I started out with, Dan Heinzen, was a postdoc with Dave just before I joined the group. Everybody wanted to be in that group. But this was also made possible by Dave’s boss's boss, the Director of the NIST Physics Laboratory, Katharine Gebbie. Katharine was an incredible woman who unfortunately passed away just a few years ago. She was also a JILA/Colorado scientist in her day in the '60s. There’s a great poster of her climbing a canyon in the JILA hallway; she flew airplanes and drove fast. At NIST, her philosophy, as she told me point-blank later when I moved to Maryland in 2007, was to “hire the best scientists and then get out of their way.” [laugh] For such a high-level government civil servant to say that is really amazing. She made Dave Wineland’s group happen. I think she also made possible the groups of Bill Phillips, Jan Hall and Eric Cornell. With Dave, those are her four Nobel Prizes, and I know that those four will tell you the same thing. I can’t say that in Dave's group at NIST we could do whatever we wanted. It had to be productive, but we could also do pure science. We were the research arm of the atomic clock division at NIST. Everything we did, so long as it involved atomic ions, had some application to atomic clocks. There were other projects. John Bollinger, another of the staff members in Dave’s group, pioneered a world-class experiment that controlled hundreds of atomic ions spinning in a different type of ion trap geometry called a Penning trap. With Steve Jefferts, Brana Jelenkovic, Amy Newbury and others, John figured out how to cool positrons in an atomic ion trap and how to produce a single thin layer of atomic ions. What did this have to do with clocks? I don't know. It didn't matter. 
It was pure scientific work. Other examples: we can hold onto these trapped ions for a really long time, and we can sensitively probe forces of nature that maybe have no underlying accepted theory. What if the direction of a spin of an electron has an absolute dependence on whether it's up or down in space? What if the quantum wave equation has a nonlinear term in it? There's no theory for why that should happen. But you can test those theories to very high precision with these trapped ions. I guess you could argue that has something to do with clocks, because if clocks are sensitive to that then you're going to need to know. So, it was just such a great situation, a great mix of pure science but also very hard-core experimental technique. That’s the beauty of metrology – you will always find science by measuring the next digit. Our quantum computing project was actually closer to the atomic clock mission, because we were engineering entangled quantum states to improve the clocks. We didn’t call them quantum logic gates, but that is exactly what they were.

Zierler:

Chris, being at NIST for eight years, did you gain an appreciation for how the federal government supports science that's been useful to you in subsequent years?

Monroe:

I should say yes, that I appreciated that our group was part of the federal government, and indeed we had to acquire funding from various other federal agencies, like the NSA and DOD. But the answer to your question is not really; I wasn’t paying much attention to the fact that this was a federal lab, because of Dave’s (and Katharine's) leadership style. We were doing pure science, and it could easily have been a university group.

Zierler:

You were sheltered; you didn't really get exposed to all of that?

Monroe:

Those few years were one of the most productive periods of my professional career, because we had this apparatus and nobody else in the world did. So, we just went to town: we had good funding and amazing postdocs, and while we did have to get funding, there was almost no competition. Also, I rarely had to worry about government bureaucracy. It was an academic group. That said, when Dave hired me, I asked to be involved with the writing and securing of proposals. These were mainly to the NSA and DOD, and in those days it was not onerous: they sent the cash, and just asked for a two-page email every year summarizing what we did. Wow, have things changed.

Zierler:

What were the circumstances for you joining University of Michigan?

Monroe:

I was a postdoc for two years, and then on staff at NIST for six years, which is roughly the time one would spend as a junior faculty member, an assistant professor. I went to Michigan after eight years at NIST, partly because it was clear that this field had taken off. Funny, when Dave first hired me, he told me, "Well, you know, if this quantum computing stuff doesn't work out, we'll go back to making clocks." [laugh] That would have been fine. It was great to work with him and his group. But obviously, the quantum computing stuff did work out. Nevertheless, leaving NIST was an extremely risky move. This reminds me of a time in '96 or '97. We were toying with additional quantum manipulations of our cold atomic ions: we put an atom in two places at the same time, in a state akin to “Schrödinger’s Cat,” and the way we did that turned out to be the way that everybody does gates nowadays. It was a refinement of the Cirac and Zoller scheme that was cleaner and less error-prone. I clearly remember the day that I walked into Dave's office and said, "Dave, if we could only get the laser force to depend on the qubit state in this way, then we could make this Cat state." I added, "But I don't know how to do it." And he said, "Oh, but you can do it by controlling the polarization of the laser beam." I mention this to note that we were on exactly the same page, and it was amazing! I've never had a scientific interaction like that, and to this day, it's so memorable. I don't think Dave remembers it, even after I reminded him, but it was just amazing. An incredible eight years! I felt terrible leaving NIST, but the reason I left is that we were really the only ion trap group in the US using these cold ions to do these quantum experiments. There was not a single university group doing this, partly because it was thought to be too expensive.
Before Dave hired me, I did interview for a few university posts, and the departments, especially those with a presence in AMO Physics, would pepper me with the question of “how could you afford to compete with Dave Wineland?” That was ridiculous, because there were dozens of cold atom groups, and they should have been asked how they would compete with Wieman/Cornell, Chu, Phillips, or Pritchard/Ketterle. So, I had a bit of a chip on my shoulder when trapped ions were ready to stick out, and Michigan was all for it. I went to Michigan with nothing but an empty lab. For the first few months, I remember, I was buying things, but the lab was empty. I'm thinking, my God, what did I just do? I left this group at the pinnacle of their production; they kept going, of course, and I would have to compete with them. But I think in retrospect it was a good thing, because now there are lots of university groups doing this.

Zierler:

You came on with tenure?

Monroe:

Yes.

Zierler:

And was part of the package that you would be supported with the resources that you felt you needed to build up your own group?

Monroe:

Yes. That's typical. There was a so-called start-up package.

Zierler:

I mean, let me rephrase. It's typical, of course, but as you describe it, you'd need something pretty good to be convinced to leave what you have.

Monroe:

Yes. There were enough resources to do what I wanted, and indeed this type of research was expensive. But startup packages are in the noise compared with the future opportunities to get funding. (That is a good message for young faculty.) It is important to note that Michigan had an existing community of optical scientists and atomic physicists. Michigan was a center for pulsed lasers: high-energy lasers with very fast pulses. We don't use such ultrafast lasers so much in the field of cold atomic physics. But Michigan had a great history in atomic physics, and also a new building with beautiful new labs. Plus, my wife and I had two young girls at home, and we were moving back to where I was raised and where my parents lived. My daughters became very close to my mother and father; this was a wonderful aspect of the move. Professionally, it was incredibly risky, but I'm glad I did it. I think it opened the door for more universities and faculty to move in this direction. And nowadays this type of research is not so expensive at all. The lasers are a lot easier, the techniques are out there, and it is fertile research ground, so we now have dozens of university groups doing this kind of work.

Zierler:

Chris, the opportunity to build a lab from scratch obviously invites existential questions about what you want to do. So, to what extent was that an opportunity for you to switch things up or did you want to continue essentially what you were doing but do it in a different place?

Monroe:

It was easy to do similar things, because nobody else in the world was doing it. Plus, I was using a different atom, a different suite of lasers, a different approach, or so I thought. My closest colleague at Michigan, Phil Bucksbaum, a luminary in ultrafast lasers and now at Stanford, told me, “Well, in a few years when you're doing something totally different than what Dave Wineland is doing, then...” And I didn't hear anything after “then” because I was thinking, what's he talking about? My group is the first university group doing trapped ion quantum computing, so of course I'm going to do very similar things to what I was doing at NIST; I'm going to compete head-to-head with Dave. I expected that, and that's fine. That's what science is. And if there's no competition, then there’s no progress and no interest. In the end, of course, I moved in several different directions, as Phil predicted. It was risky and fun. I mentioned earlier wanting control of things. Now I had 100-percent control over what was going on, although at NIST I pretty much did as well. If I had wanted to do something different at NIST, Dave Wineland would have let it be, I think. And I’ll admit that I was clearly somewhat in Dave’s shadow at NIST. In any case, I wanted a culture with more students, maybe not all postdocs. Looking back, it was indeed a tricky time. I remember thinking, what the hell, just go for it, having this feeling of reckless abandon in designing my research.

Zierler:

And you were teaching at CU Boulder the whole time you were at NIST, so slotting into a professor's life was not so much of a transition for you.

Monroe:

Actually, I don't think I taught once at CU. I had a lecturer position, which meant that I could take graduate students for research. I did take a couple of graduate students from the University of Colorado, Brian King and then David Kielpinski. But I didn't teach classes there, unfortunately.

Zierler:

So, you did have to get into lecture mode?

Monroe:

Oh yes. I love teaching, even though at the time I had never formally done it in the format of a classroom. Research is the pinnacle of teaching, although we don’t get university teaching credit for that. Teaching is so fun, but classroom lecturing is a lot of work. I love the performance part of it. The preparation, not so much. [laugh]

Zierler:

What kind of classes did you teach initially at Michigan?

Monroe:

At Michigan, the big classes had recitation sections, and those were all taught by faculty, as at many research-intensive universities. I did several of those. That was a lot of face time, twelve hours a week, but not a whole lot of preparation, because different sections of the class were taught by different people and they were all centrally coordinated: the schedule and the plan for the class. This was good for younger faculty like me. But I also taught a few other courses; my favorite class was the physics of music. Oh, that was great! That class did take a lot of preparation, 30 or 40 hours a week outside of lectures. Not enough hours in the week. But I just loved preparing for it; as a classical music junkie, I can find examples of almost any auditory effect in some obscure symphony or sonata. The class was not for physics majors; it was open to the whole campus. So, you had to temper the math down quite a bit. But it covered the perception of music, the scale system, and how instruments work. And the physics department had a great demonstration lab staff. They had the most amazing demos, and we would spend time assembling some type of instrument and measuring equipment, like wine glasses and oscilloscopes and a time-lapse camera to view the vibrations. Beyond that, I also taught introductory quantum physics for undergraduates, and introductory courses on modern physics and waves.

Zierler:

Now, were you the inaugural director of the focus group, the NSF FOCUS Group?

Monroe:

No, no. That was Phil Bucksbaum. He and Gerard Mourou were the initial movers behind the NSF FOCUS Center. When Phil left for Stanford, I took over for the last couple of years.

Zierler:

Now, was that wrapped up in your decision to switch departments to EE and computer science?

Monroe:

Partly it was because a lot of the laser technology was in the College of Engineering at Michigan, but also some of the Michigan computer scientists, like John Hayes, Igor Markov and Yaoyun Shi, were getting involved in quantum computing, and they wanted a little more voice in quantum. But that was a courtesy appointment; I wasn't really active in the engineering department. One thing that did come out of my work at Michigan is the use of pulsed lasers for quantum control of atoms. Nobody was doing that, and if I hadn't been at Michigan, I probably never would have done it either. A pulsed laser is now the main laser we use in trapped ion quantum computing. Such lasers are very stable and don’t demand much attention, unlike the conventional continuous-wave lasers used for cold atomic physics. This is because such pulsed lasers are made for the silicon industry, for lithography and semiconductor fabrication. At Michigan I got up to speed with what these lasers do and how they can be used, just by hanging out with pulsed laser jocks.

Zierler:

Again, to go back to this foreshadowing question, was it at Michigan where you found yourself increasingly devoted to quantum computer questions in and of themselves, and not the sort of research areas that were peripheral and formative to later interests?

Monroe: